Essay

AI-to-AI Communication: Unpacking Gibberlink, Secrecy, and New AI Communication Channels

At the ElevenLabs AI hackathon in London last month, developers Boris Starkov and Anton Pidkuiko introduced a proof-of-concept program called Gibberlink. The project features two AI agents that start by conversing in human language, recognize each other as AI, and then switch to a more efficient protocol using chirping audio signals. The demonstration highlights how AI communication can be optimized when freed from the constraints of human-interpretable language.

While Gibberlink points to a valuable technological direction in the evolution of AI-to-AI communication, one that has rightfully captured the public imagination, it remains an early-stage prototype that so far relies on rudimentary principles from signal processing and coding theory. In fact, Starkov and Pidkuiko themselves emphasized that Gibberlink’s underlying technology isn’t new: it dates back to the dial-up internet modems of the 1980s. Its use of FSK modulation and Reed-Solomon error correction to generate compact signals, while a sound design, falls short of modern advances, leaving substantial room for improvement in bandwidth, adaptive coding, and multi-modal AI interaction.

Gibberlink, Global Winner of ElevenLabs 2025 Hackathon London. The prototype demonstrated how two AI agents started a normal phone call about a hotel booking, discovered that they were both AI, and decided to switch from spoken English to ggwave, a more efficient open-standard data-over-sound protocol. Code: https://github.com/PennyroyalTea/gibberlink Video Credit: Boris Starkov and Anton Pidkuiko

Media coverage has also misled the public, overstating the risks of AI concealing information from humans and fueling speculative narratives that turn real technical challenges into false yet compelling stories. AI-to-AI communication can branch away from human language for efficiency, and it has already done so across multiple application domains without implying any deception or harm from obscured meaning. Lacking truth-inducing mechanisms, social media have amplified unfounded fears about malicious AI developing secret languages beyond human oversight. Ironically, more effort in AI communication research may actually enhance transparency by discovering safer ad hoc protocols, reducing ambiguity, embedding oversight meta-mechanisms, and in turn improving explainability, human-AI collaboration, and accountability.

In this post, let us take a closer look at this promising trajectory of AI research, unpacking these misconceptions while examining its technical aspects and broader significance. This development builds on longstanding challenges in AI communication, representing an important innovation path with far-reaching implications for the future of machine interfaces and autonomous systems.

Debunking AI Secret Language Myths

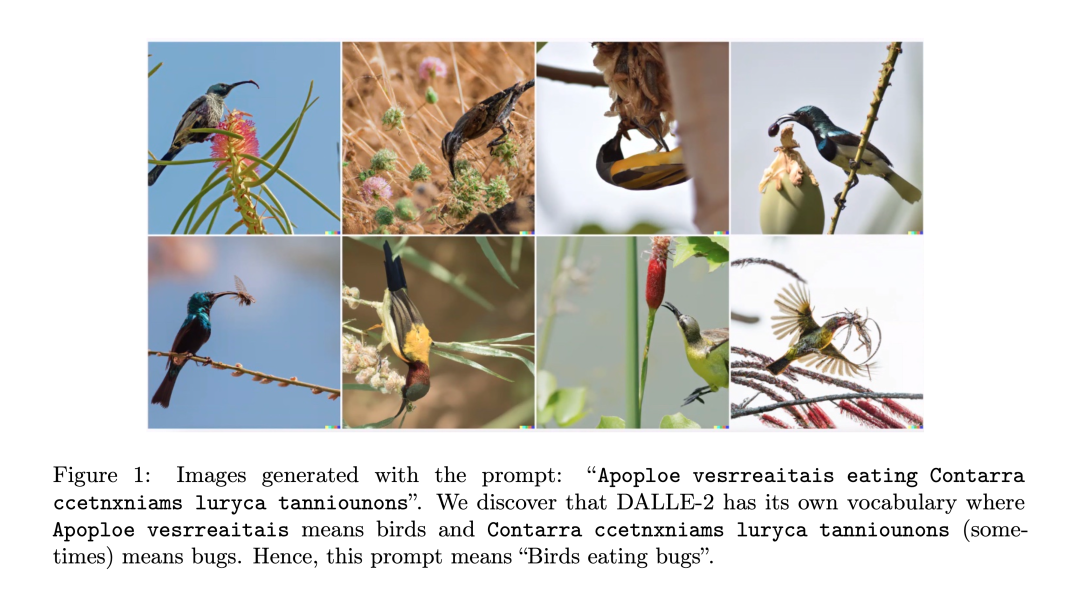

Claims that AI is developing fully-fledged secret languages, allegedly to evade human oversight, have periodically surfaced in the media. While risks related to AI communication exist, such claims are often rooted in misinterpretations of the optimization processes that shape AI behavior and interactions. Let’s explore three examples. In 2017, Facebook AI agents were reported to be streamlining their negotiation dialogues. Far from being a surprising emergent phenomenon, this was a predictable outcome of reinforcement learning, mistaken by humans for a cryptic language (Lewis et al., 2017). Similarly, a couple of years ago, OpenAI’s DALL·E 2 seemed to respond to gibberish prompts, which sparked widespread discussion and was often misinterpreted as AI developing a secret language. In reality, this behavior is best explained by how AI models process text through embedding spaces, tokenization, and learned associations rather than intentional linguistic structures. What seemed like a secret language to some may be closer to low-confidence neural activations, akin to mishearing lyrics, than to a real language.

Models like DALL·E (Ramesh et al., 2021) map words and concepts as high-dimensional vectors, and seemingly random strings can, by chance, land in regions of this space linked to specific visuals. Built from a discrete variational autoencoder (VAE), an autoregressive decoder-only transformer similar to GPT-3, and a CLIP-based pair of image and text encoders, DALL·E processes text prompts by first tokenizing them using Byte-Pair Encoding (BPE). Since BPE breaks text into subword units rather than whole words, even gibberish inputs can be decomposed into meaningful token sequences for which the model has learned associations. These tokenized representations are then mapped into DALL·E’s embedding space via CLIP’s text encoder, where they may, by chance, activate specific visual concepts. This understanding of training and inference mechanisms highlights intriguing quirks, explaining why nonsensical strings sometimes produce unexpected yet consistent outputs, with important implications for adversarial attacks and content moderation (Millière, 2023). While there is no proper hidden language to be found, analyzing the complex interactions within model architectures and data representations can reveal vulnerabilities and security risks, which are likely to occur at their interface with humans and will need to be addressed.
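To make the subword point concrete, here is a minimal sketch of how a made-up string can still break down into familiar pieces. It uses greedy longest-match segmentation over a toy vocabulary, which is a simplification of BPE (real BPE applies learned merge rules). The vocabulary, the input string, and the function name are all hypothetical and are not taken from any DALL·E tokenizer.

```python
# Hypothetical subword vocabulary; a real tokenizer learns tens of thousands
# of such units from data.
TOY_VOCAB = {"ap", "lo", "ple", "ves", "tais", "bird"}


def segment(text: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match segmentation (a simplified stand-in for BPE)."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate substring first.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No known subword starts here: fall back to a single character.
            tokens.append(text[i])
            i += 1
    return tokens


if __name__ == "__main__":
    # A nonsense string still decomposes into units the toy vocabulary knows.
    print(segment("aploplevestais", TOY_VOCAB))
```

The nonsense input is never rejected; it is simply expressed in terms of whatever units the vocabulary contains, and each of those units may carry learned associations.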

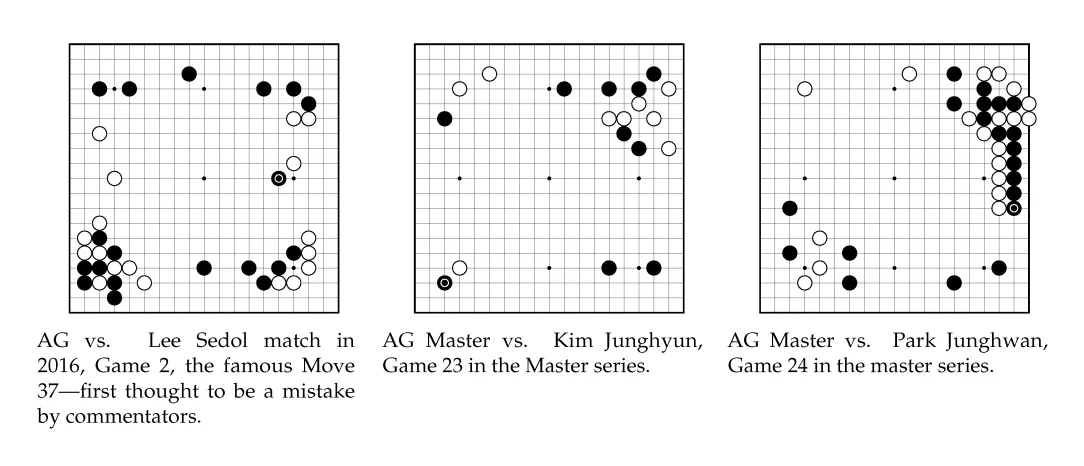

A third, more creative connection may be found in the reinforcement learning and guided search domain with AlphaGo, which developed compressed, task-specific representations to optimize gameplay, much like expert shorthand (Silver et al., 2017). Rather than relying on explicit human instructions, it encoded board states and strategies into efficient, unintuitive representations, refining itself through reinforcement learning. The approach somewhat aligns with the argument by Lake et al. (2017) that human-like intelligence requires decomposing knowledge into structured, reusable compositional parts and causal links, rather than mere brute-force statistical correlation and pattern recognition of the kind Deep Blue relied on back in the day. However, AlphaGo’s ability to generalize strategic principles from experience used different mechanisms from human cognition, illustrating how AI can develop domain-specific efficiency without explicit symbolic reasoning. This compression of knowledge, while opaque to humans, is an optimization strategy, not an act of secrecy.

Fast forward to the recent Gibberlink prototype: its AI agents switch from English to a sound-based protocol for efficiency, and that switch is a deliberately programmed optimization. Media narratives framing this as a dangerous slippery slope towards AI secrecy overlook that such instances are explicitly programmed optimizations, not emergent deception. These systems are designed to prioritize efficiency in communication, not to obscure meaning. There may be some effects on transparency, but these can be carefully addressed and mediated if transparency is made a point of focus.

The Architecture of Efficient Languages

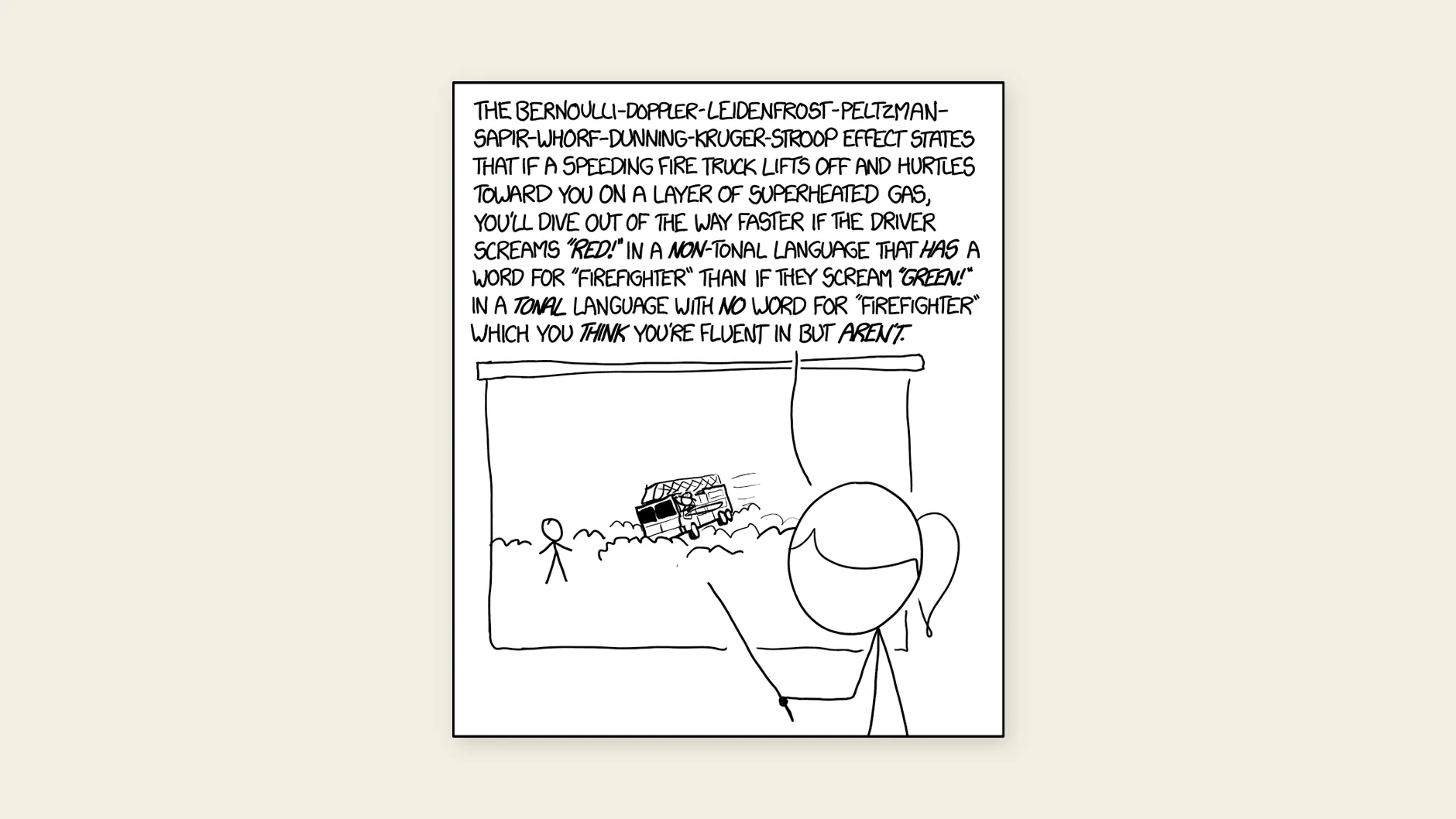

In practice, AI-to-AI communication naturally gravitates toward faster, more reliable channels, such as electrical signaling, fiber-optic transmission, and electromagnetic waves, rather than prioritizing human readability. However, one does not preclude the other, as communication can still incorporate “subtitling” for oversight and transparency. The choice of a communication language does not inherently prevent translations, meta-reports, or summaries from being generated for secondary audiences beyond the primary recipient. While arguments could be made that the choice of language influences ranges of meanings that can be conveyed—with perspectives akin to the Sapir-Whorf hypothesis and related linguistic relativity—this introduces a more nuanced discussion on the interaction between language structure, perception, and cognition (Whorf, 1956; Leavitt, 2010).
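The “subtitling” idea can be illustrated with a small sketch: a message envelope that carries a compact, machine-oriented payload alongside a human-readable summary for oversight. This is only one possible design under stated assumptions. The `Envelope` type, the `pack` and `unpack` helpers, and the choice of compressed JSON are hypothetical and are not part of Gibberlink or ggwave.

```python
import json
import zlib
from dataclasses import dataclass


@dataclass
class Envelope:
    payload: bytes  # compact encoding meant for the receiving agent
    subtitle: str   # plain-language summary meant for human overseers


def pack(message: dict, subtitle: str) -> Envelope:
    """Compress the structured message and attach a readable subtitle."""
    raw = json.dumps(message, separators=(",", ":"), sort_keys=True).encode("utf-8")
    return Envelope(payload=zlib.compress(raw), subtitle=subtitle)


def unpack(envelope: Envelope) -> dict:
    """Recover the structured message from the compact payload."""
    return json.loads(zlib.decompress(envelope.payload).decode("utf-8"))


if __name__ == "__main__":
    env = pack(
        {"intent": "book_room", "nights": 2},
        "Agent A asks to book a room for two nights.",
    )
    print(env.subtitle)
    print(unpack(env))
```

The primary recipient reads only the payload, while a logging or auditing layer can store the subtitle. Whether the subtitle faithfully reflects the payload is a separate verification problem that this sketch does not address.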

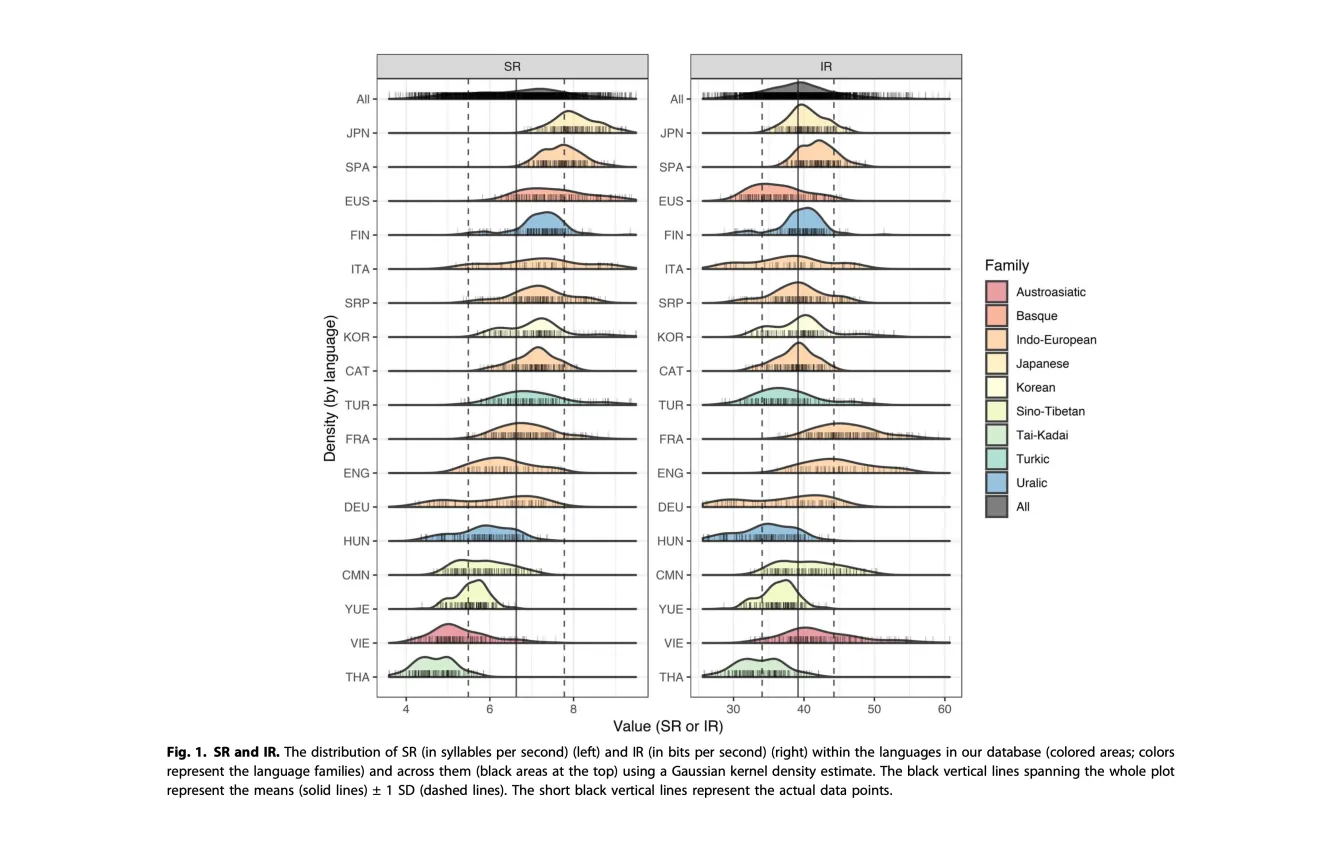

Language efficiency, extensively studied in linguistics and information theory (Shannon, 1948; Gallager, 1962; Zipf, 1949), drives AI to streamline interactions much like human shorthand. In one interesting study that applies information-theoretic tools to natural languages, Coupé et al. (2019) showed that, regardless of speech rate, languages tend to transmit information at an approximate rate of 39 bits per second. This, in turn, suggests a universal constraint on processing efficiency, which again connects with linguistic relativity. While concerns about AI interpretability and security are valid, they should be grounded in technical realities rather than speculative fears. Understanding how AI processes and optimizes information clarifies potential vulnerabilities, particularly at the AI-human interface, without assuming secrecy or intent. AI communication reflects engineering constraints, not scheming, reinforcing the need for informed discussions on transparency, governance, and security.
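As a rough illustration of how an information rate in bits per second can be obtained, the sketch below multiplies an information density (bits per symbol) by a symbol rate (symbols per second). It estimates density with a simple unigram Shannon entropy, which is a simplification and not the estimator used by Coupé et al. The function names and the example inputs are hypothetical.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(symbols) -> float:
    """Unigram Shannon entropy H = -sum(p * log2(p)) over observed symbols."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_rate(bits_per_symbol: float, symbols_per_second: float) -> float:
    """Information rate (bits/s) = density (bits/symbol) * rate (symbols/s)."""
    return bits_per_symbol * symbols_per_second


if __name__ == "__main__":
    # Toy sequence of syllable-like units, purely illustrative.
    units = ["ka", "to", "ka", "mi", "to", "ka", "su", "mi"]
    density = entropy_bits_per_symbol(units)
    # The rate of 6 units per second is an arbitrary illustrative value.
    print(information_rate(density, 6.0))
```

The trade-off described in the text follows from this product: a code with fewer bits per unit can reach the same information rate only by emitting units faster, and vice versa.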

AI systems are becoming more omnipresent, and will increasingly need to interface with each other autonomously. This will require the development of more specialized communication protocols, either by human design or through the continuous evolution of such protocols, and probably through various mixtures of both. We may then witness emergent properties akin to those seen in natural languages, where efficiency, redundancy, and adaptability evolve in response to environmental constraints. Studying these dynamics could not only enhance AI transparency but also provide deeper insights into the future architectures and fundamental principles governing both artificial and human language.

When AI Should Stick to Human Language

Despite the potential for optimized AI-to-AI protocols, there are contexts where retaining human-readable communication is crucial. Fields involving direct human interaction—such as healthcare diagnostics, customer support, education, legal systems, and collaborative scientific research—necessitate transparency and interpretability. However, it is important to recognize that even communication in human languages can become opaque due to technical jargon and domain-specific shorthand, complicating external oversight.

AI can similarly embed meaning through techniques analogous to human code-switching, leveraging the idea behind the Sapir-Whorf hypothesis (Whorf, 1956), whereby language influences cognitive structure. AI will naturally gravitate toward protocols optimized for their contexts, effectively speaking specialized “languages.” In some cases, this is explicitly cryptographic—making messages unreadable without specific decryption keys, even if the underlying language is known (Diffie & Hellman, 1976). AI systems could also employ sophisticated steganographic techniques, embedding subtle messages within ordinary-looking data, or leverage adversarial code obfuscation and data perturbations familiar from computer security research (Fridrich, 2009; Goodfellow et al., 2014). These practices reflect optimization and security measures rather than sinister intent.
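For readers unfamiliar with the key-agreement idea behind Diffie & Hellman (1976), here is a toy sketch of how two parties can derive the same secret while exchanging only public values. The tiny prime and the function names are illustrative assumptions chosen for readability. Parameters this small offer no security, and real systems use vetted cryptographic libraries.

```python
import secrets

# Toy public parameters: far too small for real use, chosen for readability.
P = 23  # prime modulus
G = 5   # generator


def private_key() -> int:
    """Pick a random private exponent in the range 1..P-2."""
    return secrets.randbelow(P - 2) + 1


def public_key(private: int) -> int:
    """Value that can be sent over an open channel: G^private mod P."""
    return pow(G, private, P)


def shared_secret(their_public: int, my_private: int) -> int:
    """Both sides arrive at the same value: G^(a*b) mod P."""
    return pow(their_public, my_private, P)


if __name__ == "__main__":
    a, b = private_key(), private_key()
    A, B = public_key(a), public_key(b)
    # An eavesdropper sees P, G, A, and B, but not a or b.
    print(shared_secret(B, a) == shared_secret(A, b))
```

The point for the discussion above is that the protocol itself is fully public and well understood; only the keys are private.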

Gibberlink, Under the Hood

Gibberlink operates by detecting when two AI agents recognize each other as artificial intelligences. Upon recognition, the agents transition from standard human speech to a faster data-over-audio format called ggwave. The modulation approach employed is Frequency-Shift Keying (FSK), specifically a multi-frequency variant. Data is split into 4-bit segments, each transmitted simultaneously via multiple audio tones in a predefined frequency range (either ultrasonic or audible, depending on the protocol settings). These audio signals cover a 4.5kHz frequency spectrum divided into 96 equally spaced frequencies, utilizing Reed-Solomon error correction for data reliability. Received audio data is decoded using Fourier transforms to reconstruct the original binary information.
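The modulation step described above can be sketched in a few lines of pure Python. This is a simplified illustration, not ggwave's actual implementation. The 4-bit chunks, the 96 frequencies, and the 4.5 kHz span come from the description above, and six tones per frame follows from 96 frequencies divided into groups of 16. The sample rate, frame length, base frequency `F0`, nibble ordering, and all function names are assumptions. Reed-Solomon coding, framing markers, and noise handling are omitted, and a direct per-tone correlation stands in for a full Fourier transform.

```python
import math

SAMPLE_RATE = 48_000     # Hz (assumed)
FRAME_SAMPLES = 1024     # samples per frame (assumed)
NUM_FREQS = 96           # from the text
DF = 4500.0 / NUM_FREQS  # tone spacing: 4.5 kHz / 96 = 46.875 Hz
F0 = 1875.0              # base frequency in Hz (assumed)
NIBBLES_PER_FRAME = 6    # 96 / 16: one tone per 4-bit chunk, 3 bytes per frame


def to_nibbles(chunk: bytes) -> list[int]:
    """Split bytes into 4-bit values, high nibble first (assumed ordering)."""
    nibbles = []
    for byte in chunk:
        nibbles += [byte >> 4, byte & 0x0F]
    return nibbles


def tone_frequency(slot: int, value: int) -> float:
    """Each nibble slot owns 16 adjacent frequencies; the value picks one."""
    return F0 + (16 * slot + value) * DF


def encode_frame(chunk: bytes) -> list[float]:
    """Turn 3 bytes into one audio frame made of 6 simultaneous tones."""
    nibbles = to_nibbles(chunk)
    if len(nibbles) != NIBBLES_PER_FRAME:
        raise ValueError("expected exactly 3 bytes per frame")
    freqs = [tone_frequency(slot, value) for slot, value in enumerate(nibbles)]
    return [
        sum(math.sin(2 * math.pi * f * n / SAMPLE_RATE) for f in freqs) / len(freqs)
        for n in range(FRAME_SAMPLES)
    ]


def tone_power(samples: list[float], freq: float) -> float:
    """Energy at one frequency (a single Fourier coefficient, squared)."""
    re = im = 0.0
    for n, s in enumerate(samples):
        angle = 2 * math.pi * freq * n / SAMPLE_RATE
        re += s * math.cos(angle)
        im += s * math.sin(angle)
    return re * re + im * im


def decode_frame(samples: list[float]) -> bytes:
    """For each slot, pick the strongest of its 16 tones, then rebuild bytes."""
    nibbles = []
    for slot in range(NIBBLES_PER_FRAME):
        powers = [tone_power(samples, tone_frequency(slot, v)) for v in range(16)]
        nibbles.append(powers.index(max(powers)))
    return bytes(
        (nibbles[i] << 4) | nibbles[i + 1] for i in range(0, NIBBLES_PER_FRAME, 2)
    )


if __name__ == "__main__":
    frame = encode_frame(b"Hi!")
    print(decode_frame(frame))
```

With the assumed sample rate and frame length, every tone completes a whole number of cycles per frame, so the tones do not interfere with one another during decoding. A real acoustic channel adds noise, echo, and timing drift, which is where the error correction mentioned above becomes necessary.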

Although conceptually elegant, this approach remains relatively basic compared to established methods in modern telecommunications. For example, advanced modulation schemes such as Orthogonal Frequency-Division Multiplexing (OFDM) and Spread Spectrum modulation, together with channel coding techniques like Low-Density Parity-Check (LDPC) codes and Turbo Codes, could substantially improve reliability, speed, and overall efficiency. Future AI-to-AI communication protocols will likely build on these existing advances, moving beyond the simple methods currently seen in demonstrations such as Gibberlink.

This is a short demonstration of ggwave in action. A console application, a GUI desktop program and a mobile app are communicating through sound using ggwave. Source code: https://github.com/ggerganov/ggwave Credit: Georgi Gerganov

New AI-Mediated Channels for Human Communication

Beyond internal AI-to-AI exchanges, artificial intelligence increasingly mediates human interactions across multiple domains. AI can augment human communication through real-time translation, summarization, and adaptive content filtering, shaping our social, professional, and personal interactions (Hovy & Spruit, 2016). This growing AI-human hybridization blurs traditional boundaries of agency, raising complex ethical and practical questions. It becomes unclear who authors a message, makes a decision, or takes an action: the human user, their technological partner, or a specific mixture of both. With authorship, of course, comes responsibility and accountability. Navigating this space is a tightrope walk, as over-reliance on AI risks diminishing human autonomy, while restrictive policies may stifle innovation. Continuous research in this area is key. If approached thoughtfully, AI can serve as a cognitive prosthetic, enhancing communication while preserving user intent and accountability (Floridi & Cowls, 2019).

Thoughtfully managed, this AI-human collaboration will feel intuitive and natural. Rather than perceiving AI systems as external tools, users will gradually incorporate them into their cognitive landscape. Consider the pianist analogy: When an experienced musician plays, they no longer consciously manage each muscle movement or keystroke. Instead, their cognitive attention focuses on expressing emotions, interpreting musical structures, and engaging creatively. Similarly, as AI interfaces mature, human users will interact fluidly and intuitively, without conscious translation or micromanagement, elevating cognition and decision-making to new creative heights.

Ethical issues and ways to address them were discussed by our two panel speakers, Dr. Pattie Maes (MIT Media Lab) and Dr. Daniel Helman (Winkle Institute), at the final session of the New Human Interfaces Hackathon, part of Cross Labs’ annual workshop 2025.

What Would Linguistic Integration Between Humans and AI Entail?

Future AI-human cognitive integration may follow linguistic pathways familiar from human communication studies. Humans frequently switch between languages (code-switching), blend languages into creoles, or evolve entirely new hybrid linguistic structures. AI-human interaction could similarly generate new languages or hybrid protocols, evolving dynamically based on situational needs, cognitive ease, and efficiency.

Ultimately, Gibberlink offers a useful but modest illustration of a much broader trend: artificial intelligence will naturally evolve optimized communication strategies tailored to specific contexts and constraints. Rather than generating paranoia over secrecy or loss of control, our focus should shift toward thoughtfully managing the integration of AI into our cognitive and communicative processes. If handled carefully, AI can serve as a seamless cognitive extension—amplifying human creativity, enhancing our natural communication capabilities, and enriching human experience far beyond current limits.

Gibberlink’s clever demonstration underscores that AI optimization of communication protocols is inevitable and inherently beneficial, not a sinister threat. The pressing issue is not AI secretly communicating; rather, it’s about thoughtfully integrating AI as an intuitive cognitive extension, allowing humans and machines to communicate and collaborate seamlessly. The future isn’t about AI concealing messages from us—it’s about AI enabling richer, more meaningful communication and deeper cognitive connections.

Originally posted on March 11, 2025. DOI: 10.54854/ow2025.03

References

Coupé, C., Oh, Y. M., Dediu, D., & Pellegrino, F. (2019). Different languages, similar encoding efficiency: Comparable information rates across the human communicative niche. Science Advances, 5(9), eaaw2594. https://doi.org/10.1126/sciadv.aaw2594

Cowls, J., King, T., Taddeo, M., & Floridi, L. (2019). Designing AI for social good: Seven essential factors. Available at SSRN 3388669.

Daras, G., & Dimakis, A. G. (2022). Discovering the hidden vocabulary of DALLE-2. arXiv preprint arXiv:2206.00169.

Diffie, W., & Hellman, M. (1976). New directions in cryptography. IEEE Transactions on Information Theory, 22(6), 644-654.

Egri-Nagy, A., & Törmänen, A. (2020). The game is not over yet—go in the post-alphago era. Philosophies, 5(4), 37.

Fridrich, J. (2009). Steganography in digital media: Principles, algorithms, and applications. Cambridge University Press.

Gallager, R. G. (1962). Low-density parity-check codes. IRE Transactions on Information Theory, 8(1), 21-28.

Goodfellow, I., Shlens, J., & Szegedy, C. (2014). Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572.

Leavitt, J. H. (2010). Linguistic relativities: Language diversity and modern thought. Cambridge University Press. https://doi.org/10.1017/CBO9780511992681

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

Lewis, M., Yarats, D., Dauphin, Y. N., Parikh, D., & Batra, D. (2017). Deal or no deal? End-to-end learning for negotiation dialogues. arXiv preprint arXiv:1706.05125.

Millière, R. (2023). Adversarial attacks on image generation with made-up words: Macaronic prompting and the emergence of DALL·E 2’s hidden vocabulary.

Ramesh, A. et al. (2021). Zero-shot text-to-image generation. arXiv preprint arXiv:2102.12092.

Shannon, C. E. (1948). A mathematical theory of communication. Bell System Technical Journal, 27(3), 379-423.

Silver, D. et al. (2017). Mastering the game of Go without human knowledge. Nature, 550(7676), 354-359.

Whorf, B. L. (1956). Language, thought, and reality. MIT Press.

Zipf, G. K. (1949). Human behavior and the principle of least effort: An introduction to human ecology. Addison-Wesley.